As Seoul races to build GPU-rich AI infrastructure, the real test lies in electricity supply, cooling technology, and regulatory reform — not just compute capacity.

South Korea is sprinting toward its goal of becoming an AI powerhouse. Tech giants and policymakers alike see artificial intelligence as the country’s next industrial engine — a natural evolution from its dominance in semiconductors, displays, and 5G. The scale of ambition is immense: major corporations are ordering hundreds of thousands of GPUs, and the government is backing plans for national AI computing centers and hyperscale “AI factories.”

Yet there’s a growing gap between vision and capacity. Every new GPU cluster demands massive, continuous power — and South Korea’s grid wasn’t designed for this scale or density. In the Seoul metropolitan area, developers face grid congestion, slow permitting, and cooling limitations. The country’s AI future, it turns out, may hinge less on how many chips it buys and more on how many watts it can deliver sustainably.

Why Power Is the Bottleneck

A growth story hitting physical limits

Korea’s data-centre market is on track to more than double in value by the end of the decade. Hyperscale projects are expanding fast, and IT-load capacity could triple as AI workloads grow. But the challenge is no longer digital — it’s physical. Building GPU clusters is easy; powering and cooling them is not.

Unlike traditional workloads, AI training and inference draw power continuously and tolerate little downtime. Training a large model requires uninterrupted, high-density power and advanced cooling systems. For utilities and regulators, this means a sudden jump in baseload demand without equivalent time for grid upgrades.

An infrastructure designed for yesterday’s economy

Korea’s power system is still optimised for heavy manufacturing, not 24/7 digital computation. Electricity planning cycles stretch years ahead, while data-centre construction now moves in months. In Seoul, adding one large facility can require new substation approvals and transformer installations — a process often slower than the AI investment cycle itself.

Several structural factors intensify the strain:

- Transmission bottlenecks between industrial and urban zones.

- Limited renewable integration, due to slow permitting and grid interconnection.

- Centralised control by KEPCO, leaving little flexibility for private producers to supply clean energy directly.

The result: even as investment pours in, the grid’s capacity to absorb it lags behind. Without power security, Korea’s AI ecosystem risks building impressive hardware that runs below potential — or worse, sits idle.

The invisible cost of density

AI-optimised data centres pack dozens of GPUs into each rack, creating power densities that can exceed 40 kilowatts per rack. That density doesn’t just raise electricity use; it multiplies cooling requirements, because nearly all of the power a rack draws leaves it as heat. Traditional air cooling becomes inefficient at that scale, pushing operators to adopt liquid and immersion cooling systems. But these technologies require redesigning existing facilities and hiring specialised engineering talent, both in short supply.

In short, Korea’s AI infrastructure challenge isn’t just about scaling up — it’s about redesigning how digital infrastructure interacts with energy systems.
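A rough back-of-the-envelope sketch shows why rack density changes the planning problem. The function below is illustrative only. The 20 MW site allocation, the PUE of 1.25 (power usage effectiveness, meaning total facility power divided by IT power), and the 10 kW figure for a conventional rack are assumptions made for the example, not figures from any Korean project. Only the 40 kW rack density comes from the discussion above.

```python
import math


def racks_supported(site_power_mw: float, rack_kw: float, pue: float) -> int:
    """Return how many racks a site can power.

    site_power_mw: total power the grid connection can deliver (assumed value).
    rack_kw:       average power draw per rack.
    pue:           power usage effectiveness (total power / IT power), >= 1.0.
    """
    if site_power_mw <= 0 or rack_kw <= 0 or pue < 1.0:
        raise ValueError("power values must be positive and PUE must be >= 1.0")
    # Only the IT share of the site's power is available to the racks;
    # the rest goes to cooling and other overhead.
    it_power_kw = site_power_mw * 1000 / pue
    return math.floor(it_power_kw / rack_kw)


# Hypothetical 20 MW site with an assumed PUE of 1.25.
conventional_racks = racks_supported(20, rack_kw=10, pue=1.25)
ai_racks = racks_supported(20, rack_kw=40, pue=1.25)

print(f"Conventional racks at 10 kW: {conventional_racks}")
print(f"AI racks at 40 kW:           {ai_racks}")
```

Under these assumed numbers, the grid connection that could feed 1,600 conventional racks supports only 400 AI racks, which is why grid headroom rather than floor space tends to become the binding constraint.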

How Korea Is Responding

1. Turning cooling into a competitive advantage

Korean telecom and cloud operators are experimenting with new thermal management systems to make dense GPU clusters feasible. LG Uplus, LG CNS, and others are piloting AI-controlled liquid-cooling setups that can cut energy use for cooling by up to 10%. This may sound incremental, but across hundreds of racks, it translates to significant power savings.

What’s notable is the strategic shift: cooling is no longer seen as an operational detail but as a frontline innovation area. Success in cooling technology could allow Korea to host more compute per megawatt — effectively stretching limited power resources further.
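The idea of hosting more compute per megawatt can be illustrated with a simple model. This is a sketch under stated assumptions, not a description of any operator's system. The 100 MW grid allocation, the cooling overhead of 30% of IT load, and the 10% of IT load for other overhead are hypothetical values chosen for the example. The 10% cooling saving and the 40 kW rack density are the figures cited in this article.

```python
RACK_KW = 40.0  # rack density cited in the article


def it_capacity_mw(grid_mw: float, cooling_ratio: float, other_ratio: float) -> float:
    """IT load (MW) that fits under a fixed grid allocation.

    cooling_ratio and other_ratio are overheads expressed as a fraction of
    IT load, so total draw = IT load * (1 + cooling_ratio + other_ratio).
    """
    if grid_mw <= 0 or cooling_ratio < 0 or other_ratio < 0:
        raise ValueError("grid_mw must be positive and ratios non-negative")
    return grid_mw / (1.0 + cooling_ratio + other_ratio)


# Hypothetical inputs (assumptions for illustration only).
GRID_MW = 100.0        # fixed grid allocation for the site
COOLING_RATIO = 0.30   # cooling energy as a fraction of IT load
OTHER_RATIO = 0.10     # lighting, power conversion losses, etc.
COOLING_SAVING = 0.10  # the "up to 10%" cooling saving from the pilots

baseline_mw = it_capacity_mw(GRID_MW, COOLING_RATIO, OTHER_RATIO)
improved_mw = it_capacity_mw(
    GRID_MW, COOLING_RATIO * (1 - COOLING_SAVING), OTHER_RATIO
)
# Whole additional racks that fit in the freed-up power.
extra_racks = int((improved_mw - baseline_mw) * 1000 // RACK_KW)

print(f"IT capacity before: {baseline_mw:.1f} MW")
print(f"IT capacity after:  {improved_mw:.1f} MW")
print(f"Additional 40 kW racks: {extra_racks}")
```

With these inputs, the IT load that fits under the same grid allocation rises from about 71.4 MW to about 73.0 MW, enough for roughly 39 more racks without any new grid capacity. The gain scales with how large cooling is relative to IT load, so sites with less efficient cooling today stand to benefit more.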

2. Building where the power is

With grid saturation around Seoul, developers are moving toward regions with easier access to energy — near LNG plants, coastal ports, or industrial hubs. The upcoming Busan AI Data Centre, designed for up to 120 MW, exemplifies this trend. By co-locating with energy infrastructure, operators reduce dependency on congested metropolitan grids.

Some projects are integrating micro-grids, on-site gas turbines, or battery storage to guarantee steady supply. Others are signing long-term power purchase agreements (PPAs) to secure renewable sources, though regulatory hurdles still make that complex in Korea. The direction, however, is clear: future AI facilities will be planned around energy, not just network connectivity.

3. Policy catching up with technology

Policymakers have begun acknowledging that data centres are no longer peripheral; they are critical infrastructure. Korea’s 11th Basic Plan for Electricity Supply and Demand targets roughly 70% carbon-free power generation by 2038, setting a long-term goal of cleaner, more diversified energy. But short-term reforms matter more now:

- Accelerating grid connection approvals for digital infrastructure.

- Allowing corporate PPAs to source renewable energy directly.

- Modernising transmission networks to support distributed, high-load sites.

These changes will decide whether Korea’s AI build-out remains concentrated around Seoul or spreads into regional “AI energy clusters.”

How Korea Compares Globally

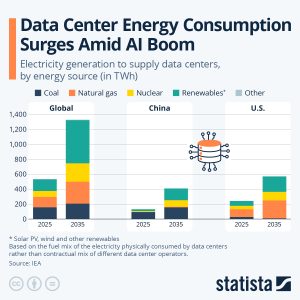

Korea’s dilemma mirrors a global trend: AI workloads are stressing electricity systems everywhere. The IEA forecasts that global data-centre electricity consumption will more than double by 2030, driven primarily by AI. In the U.S., requests to connect new large loads are already outpacing what the grid can accommodate. Europe faces carbon-emission caps that complicate expansion. China, meanwhile, can build faster but at high environmental cost.

Where Korea stands out is in its geographic compression and industrial concentration. The country’s major data-centre zones are clustered within a few hundred kilometres, magnifying the impact of every power constraint. Yet its engineering depth and manufacturing base also give it unique tools to innovate — particularly in cooling, battery storage, and semiconductor-level efficiency.

In effect, Korea is testing whether a highly urbanised, energy-constrained economy can still lead the world in AI infrastructure — not by scale, but by efficiency per watt.

What Success Looks Like

The next phase of Korea’s AI growth will be defined not by how much compute it builds, but by how intelligently it uses power. A successful energy-aware infrastructure strategy would include:

- Optimised site design: New data centres built near stable power sources and designed with liquid or immersion cooling from the start.

- Integrated power planning: Coordination between KEPCO, the Ministry of Science and ICT, and private developers to align grid investment with AI demand forecasts.

- Smarter operations: Use of AI for predictive maintenance, cooling control, and power balancing across distributed facilities.

- Sustainability metrics: Tracking energy intensity and emissions per unit of AI compute — not just total megawatts deployed.

If Korea achieves this, it can position itself as a model for “efficient AI sovereignty” — maintaining domestic control over compute capacity without overstraining its energy system.

But if power reforms and cooling innovation lag, the outcome could be paradoxical: a nation with world-class chips, ambitious AI policies, and data centres that can’t run at full power.
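The sustainability metrics listed above can be made concrete with a small calculation. The sketch below is one possible way to express energy intensity and emissions per unit of AI compute. It uses GPU-hours as the unit of compute, which is an assumption, since the article does not name a unit. The two sites, their energy and GPU-hour figures, and the grid emission factor of 0.4 kg CO2 per kWh are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FacilityReport:
    """Hypothetical reporting record for one data centre over one period."""
    name: str
    energy_mwh: float       # total facility energy, including cooling
    gpu_hours: float        # useful GPU-hours delivered in the same period
    emission_factor: float  # kg CO2 per kWh of electricity consumed

    @property
    def kwh_per_gpu_hour(self) -> float:
        """Energy intensity: facility kWh per GPU-hour delivered."""
        return self.energy_mwh * 1000 / self.gpu_hours

    @property
    def kg_co2_per_gpu_hour(self) -> float:
        """Emissions intensity: kg CO2 per GPU-hour delivered."""
        return self.kwh_per_gpu_hour * self.emission_factor


# Two made-up sites: A is larger, B is smaller but more efficient.
site_a = FacilityReport("Site A", energy_mwh=72_000, gpu_hours=60_000_000,
                        emission_factor=0.4)
site_b = FacilityReport("Site B", energy_mwh=36_000, gpu_hours=40_000_000,
                        emission_factor=0.4)

for site in (site_a, site_b):
    print(f"{site.name}: {site.kwh_per_gpu_hour:.2f} kWh/GPU-hour, "
          f"{site.kg_co2_per_gpu_hour:.2f} kg CO2/GPU-hour")
```

In this made-up comparison, Site A consumes twice the energy of Site B, yet Site B delivers each GPU-hour with about a quarter less energy and emissions. A ranking by total megawatts deployed would miss that difference, which is the point of tracking intensity alongside scale.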

Conclusion

The global AI race is no longer just about algorithms and hardware; it’s about energy. South Korea, with its advanced industrial ecosystem, finds itself on the frontline of this transition. Its success will depend on how quickly policymakers, utilities, and technology firms can turn energy efficiency into a competitive advantage.

Every GPU Korea installs represents both innovation and obligation — to generate, deliver, and cool the power that fuels it. The next frontier in AI infrastructure isn’t silicon; it’s sustainability. And whether Korea leads or lags will depend not on how many chips it buys, but on how wisely it powers them.