Efficiency-first design targets agentic AI adoption beyond big tech firms

Kakao said on Jan. 20 that it has upgraded its proprietary large language model, Kanana-2, and released four additional variants as open source, extending its effort to position the model as a practical foundation for agentic artificial intelligence. The update follows Kakao's initial open-source release of Kanana-2 on Hugging Face in December, where the model attracted attention for combining relatively strong performance with lower computational demands.

Kakao framed the update as a move to improve usability rather than simply to scale up model size. The company said the revised models are designed to run efficiently on widely used hardware such as Nvidia A100-class GPUs, making them accessible to small and midsize businesses, startups, and academic researchers that may lack the resources for cutting-edge infrastructure.

Architectural changes aimed at efficiency

At the core of the update is a stronger focus on compute efficiency. Kanana-2 uses a mixture-of-experts (MoE) architecture, in which a routing mechanism activates only a subset of the model's parameters for each token during inference. The model has 32 billion parameters in total, but Kakao said only about 3 billion are active for any given token, which it said significantly lowers computational cost without a corresponding drop in performance.
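To make the mixture-of-experts idea concrete, the sketch below shows generic top-k expert routing for a single token. It is not Kanana-2's code, which this article does not describe. The expert count (16), layer size (8), `top_k=2`, and the helper names `random_matrix` and `moe_layer` are illustrative assumptions, and each "expert" is reduced to a plain weight matrix rather than a trained feed-forward block. The last lines simply work out the share of parameters Kakao says are active (about 3 billion of 32 billion). In typical MoE designs that active count also covers layers every token passes through, such as attention, so the ratio reflects Kakao's figures, not the toy model.

```python
import math
import random

def random_matrix(rows, cols, seed):
    """Illustrative random weights; real experts are trained network blocks."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

def matvec(w, x):
    """Multiply matrix w (list of rows) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(x, experts, router, top_k=2):
    """Send one token vector x to its top_k experts and blend their outputs.

    Only the chosen experts' weight matrices are multiplied, so per-token
    compute grows with top_k, not with the total number of experts.
    """
    logits = matvec(router, x)  # one routing score per expert
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in chosen])  # mixing weights over chosen experts only
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = matvec(experts[i], x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen

# Toy configuration (assumed): 16 experts of size 8x8, 2 active per token.
dim, num_experts, top_k = 8, 16, 2
experts = [random_matrix(dim, dim, seed=i) for i in range(num_experts)]
router = random_matrix(num_experts, dim, seed=1234)
x = random_matrix(1, dim, seed=42)[0]
out, chosen = moe_layer(x, experts, router, top_k)
print("experts used for this token:", chosen)

# Arithmetic from the figures Kakao cited: about 3B active of 32B total.
ACTIVE_FRACTION = 3_000_000_000 / 32_000_000_000
print(f"active share of parameters: {ACTIVE_FRACTION:.1%}")  # 9.4%
```

In this toy setup, 14 of the 16 expert matrices are never touched for the token, which is the source of the savings the architecture is built around.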

Kakao also introduced a new "mid-training" phase between pretraining and post-training. This additional stage is designed to give the model new reasoning abilities while preserving existing knowledge, addressing catastrophic forgetting: the common tendency of models to lose earlier capabilities as they are trained on new tasks.

Four models for different use cases

The updated open-source release includes four variants, each targeting a specific type of use:

- Base: a general-purpose foundation model

- Instruct: tuned for instruction-following tasks

- Thinking: optimized for reasoning-heavy workloads

- Mid-training: aimed at research and continued adaptation

Kakao said all four models emphasize cost efficiency while strengthening tool-calling capabilities, a key requirement for agentic AI systems that need to act, not just respond.

Focus on agentic AI, not chatbots

Unlike conventional conversational models, Kanana-2 is designed to support agentic AI, which can interpret complex user requests, decide which tools to use, and execute multi-step actions. Kakao said the models were trained on extensive multi-turn datasets centered on tool usage, enabling them to autonomously select and operate external tools in real-world scenarios.

This design choice reflects a broader shift in the AI field, where developers are increasingly focused on systems that can perform tasks—such as retrieving data, executing workflows, or interacting with software—rather than only generating text.
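The act-then-respond cycle described above can be sketched as a simple agent loop: the model either requests a tool call or returns a final answer, and each tool result is fed back into the conversation before the next step. This is a minimal illustration under stated assumptions, not Kanana-2's interface. The model is replaced by a scripted stand-in (`stub_model`), and the tools (`get_weather`, `convert_temp`), the message format, and `run_agent` are all hypothetical; a real deployment would use the model's own chat and tool-calling template.

```python
import json

# Hypothetical tools; names and behavior are illustrative only.
def get_weather(city: str) -> str:
    # Returns a canned forecast in Celsius instead of calling a real service.
    return json.dumps({"city": city, "celsius": 20})

def convert_temp(celsius: float) -> str:
    return json.dumps({"fahrenheit": celsius * 9 / 5 + 32})

TOOLS = {"get_weather": get_weather, "convert_temp": convert_temp}

def stub_model(messages):
    """Scripted stand-in for an LLM: picks the next tool or gives an answer.

    A real agentic model would make this decision from the conversation.
    """
    results = [json.loads(m["content"]) for m in messages if m["role"] == "tool"]
    if len(results) == 0:
        return {"tool_call": {"name": "get_weather", "arguments": {"city": "Seoul"}}}
    if len(results) == 1:
        return {"tool_call": {"name": "convert_temp",
                              "arguments": {"celsius": results[0]["celsius"]}}}
    return {"answer": f"{results[0]['city']}: {results[0]['celsius']} C "
                      f"({results[1]['fahrenheit']} F)"}

def run_agent(user_request, model, tools, max_steps=5):
    """Loop until the model answers, executing each requested tool call."""
    messages = [{"role": "user", "content": user_request}]
    for _ in range(max_steps):
        reply = model(messages)
        if "answer" in reply:
            return reply["answer"], messages
        call = reply["tool_call"]
        result = tools[call["name"]](**call["arguments"])  # act, don't just respond
        messages.append({"role": "assistant", "tool_call": call})
        messages.append({"role": "tool", "name": call["name"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")

answer, transcript = run_agent("What's the weather in Seoul in Fahrenheit?", stub_model, TOOLS)
print(answer)  # Seoul: 20 C (68.0 F)
```

The `max_steps` cap is a common safeguard so that a model which keeps requesting tools cannot loop indefinitely.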

Benchmark results and positioning

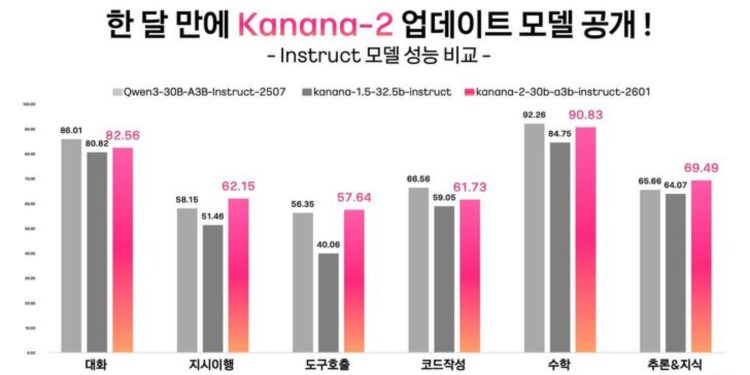

In benchmark testing, Kakao said the updated Kanana-2 models outperformed a comparable open-source model, Alibaba's Qwen3-30B-A3B-Instruct-2507, in instruction-following accuracy, multi-turn tool calling, and Korean-language tasks. While Kakao did not present the results as a definitive ranking, it argued that the comparisons show efficiency-focused models can remain competitive on core capabilities.

Industry observers note that such positioning reflects a growing emphasis on “good enough” performance combined with lower cost, rather than pursuing ever-larger models at higher expense.

Lowering barriers to AI adoption

Another central theme of the update is accessibility. Kakao said Kanana-2 is optimized to operate on general-purpose GPUs rather than requiring the latest high-end accelerators. This approach is intended to reduce barriers for organizations that want to deploy advanced AI but face budget or infrastructure constraints.

Kim Byung-hak, performance lead for Kakao’s Kanana project, said the goal was to build practical agentic AI without relying on costly hardware. “By open-sourcing high-efficiency models that work on general infrastructure, we hope to offer a new alternative for AI adoption and support the growth of Korea’s AI research ecosystem,” he said.

Kakao added that it is continuing development of a larger 155-billion-parameter model, signaling that it is pursuing both efficiency-oriented releases and longer-term ambitions to compete at the top tier of global AI development.