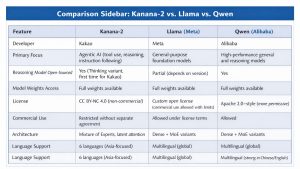

Release includes reasoning-focused model and full weights, but commercial use remains restricted

Kakao has released Kanana-2, its latest in-house large language model (LLM), as open source, expanding its push into agentic artificial intelligence. The company said the model has been published on Hugging Face and is designed to help developers build AI systems that can understand context, make decisions, and carry out tasks with less human intervention.

The release marks a notable shift in Kakao’s AI strategy, particularly because it includes a reasoning-focused model and full public access to model weights. While the company has previously open-sourced smaller or task-specific models, this is the first time it has opened a reasoning-capable LLM for external fine-tuning.

Three Models, Distinct Roles

Kakao has released three variants under the Kanana-2 family:

- Base, aimed at general-purpose language tasks

- Instruct, optimized for instruction-following through post-training

- Thinking, designed for advanced reasoning workflows

The Thinking model is the most significant addition, as it represents Kakao’s first open-source reasoning model. Developers can access full weights to adapt the models using their own datasets, although usage is limited to research and non-commercial purposes.

Focus on Agentic AI Capabilities

According to Kakao, Kanana-2 was developed with agentic AI systems in mind. These systems rely on models that can interpret intent, call external tools, and complete multi-step tasks over extended interactions. The company said Kanana-2 delivers major gains in these areas compared with its previous generation.

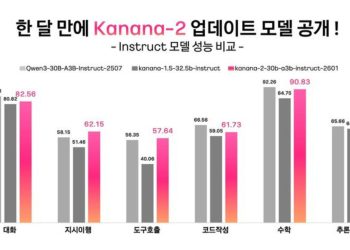

Kakao said its internal tests showed more than a threefold improvement in multi-turn tool-calling performance over Kanana-1.5. The capability is considered central to AI agents, which must plan and act across several steps rather than respond to single prompts.
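For readers unfamiliar with the term, multi-turn tool calling can be pictured as a loop in which the model requests a tool, receives the result, and then decides what to do next. The sketch below is illustrative only and is not Kakao's implementation or API: `stub_model`, `get_weather`, `book_table`, and the message format are all hypothetical stand-ins, and a real deployment would replace `stub_model` with a call to an actual LLM.

```python
from typing import Callable

# Hypothetical tools; the names and behavior are invented for illustration.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def book_table(city: str, weather: str) -> str:
    seating = "outdoor" if "Sunny" in weather else "indoor"
    return f"Booked {seating} table in {city}"

TOOLS: dict[str, Callable[..., str]] = {
    "get_weather": get_weather,
    "book_table": book_table,
}

def stub_model(messages: list[dict]) -> dict:
    """Stand-in for an LLM: picks the next step from the tool results seen so far."""
    results = [m for m in messages if m["role"] == "tool"]
    if len(results) == 0:
        # Step 1: look up the weather before deciding on seating.
        return {"role": "assistant",
                "tool_call": {"name": "get_weather", "arguments": {"city": "Seoul"}}}
    if len(results) == 1:
        # Step 2: use the first tool's output as input to the second tool.
        return {"role": "assistant",
                "tool_call": {"name": "book_table",
                              "arguments": {"city": "Seoul", "weather": results[0]["content"]}}}
    # Step 3: no more tools needed, so answer the user.
    return {"role": "assistant", "content": f"Done: {results[-1]['content']}"}

def run_agent(user_request: str, max_turns: int = 5) -> list[dict]:
    """Alternate between model replies and tool executions until a final answer."""
    messages = [{"role": "user", "content": user_request}]
    for _ in range(max_turns):
        reply = stub_model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            break  # the model produced a final answer
        result = TOOLS[call["name"]](**call["arguments"])
        messages.append({"role": "tool", "name": call["name"], "content": result})
    return messages

if __name__ == "__main__":
    for message in run_agent("Book me a table in Seoul"):
        print(message)
```

The point of the sketch is that each tool result feeds back into the conversation, so an error in an early step carries forward. That is why performance across several turns is measured separately from single-prompt accuracy.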

Architectural Changes Aim to Improve Efficiency

Kanana-2 introduces architectural updates intended to balance performance with efficiency. Kakao said it adopted multi-head latent attention (MLA) to better handle long input sequences, along with a mixture-of-experts (MoE) structure that activates only a subset of the model's parameters during inference.

Because only part of the network runs at any one time, the design reduces computing load and response time, making the model more suitable for agent-based systems that need to operate continuously or at scale.
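The idea behind an MoE layer can be sketched in a few lines: a small router scores every expert for each token, and only the top-scoring few are actually run. The toy below is a generic illustration, not Kanana-2's architecture. The class name `ToyMoELayer`, the sizes, the random weights, and the choice of softmax over the selected experts' scores are all assumptions made for the example.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

class ToyMoELayer:
    """Toy mixture-of-experts layer with top-k routing (illustrative only)."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one weight vector per expert, used to score each token.
        self.router = [[rng.uniform(-1, 1) for _ in range(dim)]
                       for _ in range(num_experts)]
        # Each toy "expert" is a per-dimension scaling vector, standing in
        # for the feed-forward network a real expert would contain.
        self.experts = [[rng.uniform(0.5, 1.5) for _ in range(dim)]
                        for _ in range(num_experts)]
        self.expert_calls = 0  # counts how many experts were actually run

    def forward(self, token):
        # Score every expert for this token (cheap: one dot product each).
        logits = [sum(w * x for w, x in zip(row, token)) for row in self.router]
        # Keep only the top_k highest-scoring experts.
        chosen = sorted(range(len(logits)), key=lambda i: logits[i],
                        reverse=True)[: self.top_k]
        # Normalize the chosen experts' scores into mixing weights.
        gates = softmax([logits[i] for i in chosen])
        out = [0.0] * len(token)
        for gate, idx in zip(gates, chosen):
            self.expert_calls += 1  # only selected experts do any work
            for d, x in enumerate(token):
                out[d] += gate * self.experts[idx][d] * x
        return out, chosen

if __name__ == "__main__":
    layer = ToyMoELayer(dim=4, num_experts=8, top_k=2)
    output, chosen = layer.forward([1.0, 0.5, -0.5, 2.0])
    print("experts used:", chosen, "of", len(layer.experts))
    print("output:", output)
```

In this toy, eight experts exist but only two run per token, so per-token expert compute is roughly a quarter of what a dense layer of the same total size would need. The total parameter count, and therefore the memory needed to hold the weights, is unchanged.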

Multilingual Expansion Targets Regional Use Cases

The model supports six languages (Korean, English, Japanese, Chinese, Thai, and Vietnamese), broadening its potential use across Asia. Kakao said the expanded language support reflects its focus on building AI agents that can operate in multilingual and cross-border environments, rather than being limited to domestic use cases.

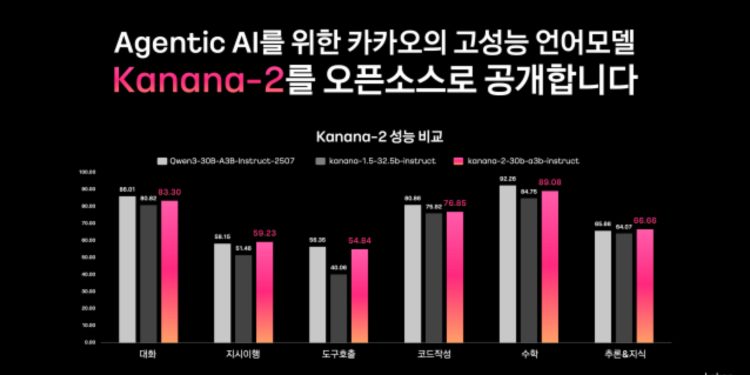

Benchmarks and External Comparisons

Kakao said internal evaluations indicate that the Kanana-2 Instruct model delivers performance broadly in line with large open models such as Alibaba’s Qwen3-30B-A3B. The company added that the Thinking variant demonstrated solid capabilities in multi-step reasoning tasks, reinforcing its suitability for reasoning-focused use cases.

However, Kakao did not disclose independent third-party evaluations, and broader adoption is likely to depend on how the model performs in real-world deployments.

Open, but Not Fully Free

Although Kakao describes the release as open source, Kanana-2 is distributed under a Creative Commons Attribution-NonCommercial 4.0 (CC BY-NC 4.0) license. The license restricts commercial use, meaning developers cannot build revenue-generating products on the model without negotiating a separate license with Kakao. The approach mirrors licensing strategies used by other major AI model providers that balance openness with commercial control.

Kim Byung-hak, performance lead for the Kanana project, said Kakao’s focus is on practical deployment rather than headline benchmarks. “Innovative AI services depend on both performance and efficiency,” he said. “Beyond raw capability, we aim to build models that can operate effectively in real services and share them openly to support the global AI research ecosystem.”

Why It Matters for Developers

- Reasoning model access: This is Kakao’s first open release of a reasoning-focused LLM with full weights, enabling deeper fine-tuning and research.

- Agent-ready design: Strong gains in tool calling and instruction following make Kanana-2 suitable for building AI agents, not just chatbots.

- Efficiency-first architecture: MoE and latent attention reduce compute costs, which matters for experimentation at scale.

- Licensing trade-off: Non-commercial terms limit startup and product use unless a separate license is secured, which may slow wider adoption.