Researchers at the Korea Advanced Institute of Science and Technology (KAIST) have created an artificial intelligence-based tool dubbed "Kaicatch" to detect computer-manipulated images known as "deepfakes." Professor Lee Heung-kyu led the project at the South Korean research-oriented science and engineering institution.

Kaicatch is the country's first commercial deepfake detection software. According to Professor Lee Heung-kyu, it can identify and analyze visual disinformation and artificial alterations that would otherwise look normal to people.

The institute developed the software in response to the growing number of fake photos and videos and the problems they can cause. The research team expects the software to help verify whether an image is authentic.

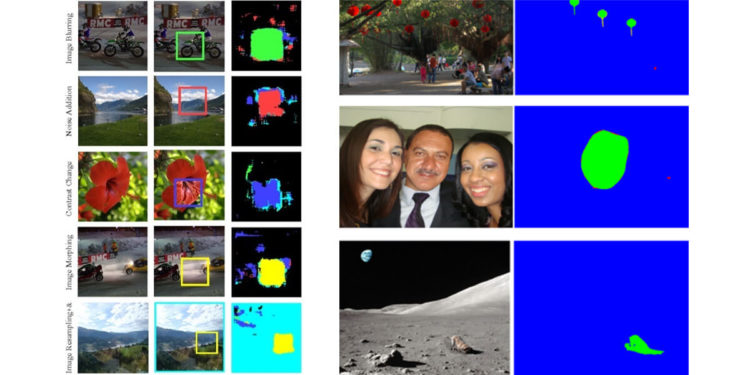

Lee said that research on the problem has involved many tests to determine whether photos are authentic. However, even successful results have had limited practical use, because those studies focused only on specific types of manipulation, such as blurring, morphing, and copy-and-paste edits.

The professor added that past research achieved high accuracy when detecting one particular, known type of alteration. When the manipulation method cannot be identified, however, accuracy drops.

AI Learning Capabilities

Kaicatch combines numerous algorithms for detecting and identifying synthetic images, including video forensics and steganography analysis. The team used a public dataset of around 300,000 images and videos to develop the detection tool.

The tool uses two different machine learning engines that can recognize altered regions of an image and take into account the type of technique used to manipulate it. Professor Lee also said the research team would continue improving the tool's ability to detect more sophisticated alterations to photos and videos.

The rise of deepfake images has fueled controversies in Korea, such as last year's "Nth room" chat room scandal. Since 2018, deepfake pornography using celebrities' faces has been distributed illegally. These incidents spurred the development of deepfake detection tools to fight visual disinformation.

High-tech startups, including Seoul-based MoneyBrain, have also joined a government-led project to secure the data needed to build a detection tool. The government pledged 2.6 billion won (US$2.3 million) to develop the tool over six months.